- Aditya's Newsletter

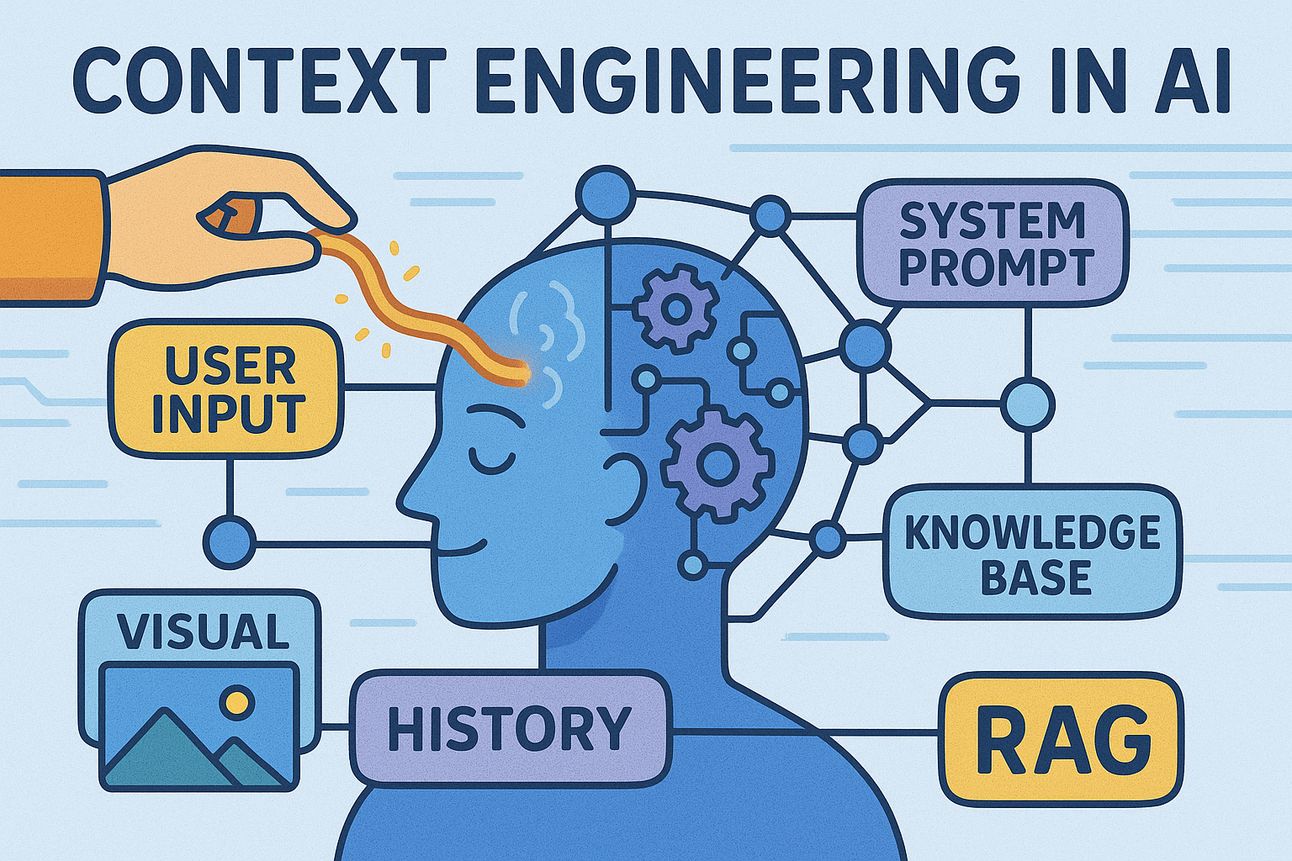

Context Engineering in AI

The new AI buzzword

We are now a community of 253! Thank you❤️

This newsletter is free and I don’t use paid advertising. I rely entirely on organic growth from readers who like my content and share it.

So, if you like today’s edition, please take a moment to share this newsletter on social media or forward this email to someone you know.

It is unclear who coined the term “context engineering”, but the concept has existed for decades and has been implemented extensively in the last couple of years. Every major AI company has been working on context engineering, whether or not it officially uses the term.

What is context engineering?

Before I even give my definition of context engineering, let me disappoint you a bit.

All prompts are context and all context is a prompt.

This is true: the words are interchangeable. People may use them to mean different things, but technically speaking, every prompt is a blob of text sent to an LLM. If you attach files or a knowledge base to your prompt as ‘context’, it is still, in the end, a blob of text, i.e. a ‘prompt’.

For a model, it is all a string of text. It doesn’t matter whether you call it ‘prompt’, ‘context’, ‘input’, or something else.

So, does that mean ‘prompt engineering’ and ‘context engineering’ are the same?

You can adopt whatever definition you like, or treat the two as the same, but here is how I draw the line:

Prompt Engineering: The process of crafting prompts to get the desired output from an LLM. Here, ‘prompts’ refer to the most immediate user-crafted instructions given to an LLM.

Context Engineering: The design of systems that organize context optimally so that an LLM can solve a problem better. Here, ‘context’ refers to all the information given to an LLM, including the system prompt, the user prompt, conversation history, knowledge bases, documents retrieved via RAG, and more.

The output of context engineering is, once again, a prompt. That is why I started with the premise that the terms can be used interchangeably.
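A minimal Python sketch of that idea, under loudly stated assumptions: the `Context` class, the `build_context` function, and the bracketed section labels are hypothetical and do not correspond to any particular vendor’s prompt format. It assembles a system prompt, retrieved knowledge-base snippets, conversation history, and the user’s prompt, and the result is still a single string, i.e. a prompt.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical container for everything an LLM call might include."""
    system_prompt: str
    user_prompt: str
    history: list[tuple[str, str]] = field(default_factory=list)  # (role, text) pairs
    retrieved_docs: list[str] = field(default_factory=list)       # e.g. RAG results


def build_context(ctx: Context) -> str:
    """Flatten every piece of context into one string (hypothetical format)."""
    parts = [f"[SYSTEM]\n{ctx.system_prompt}"]
    if ctx.retrieved_docs:
        docs = "\n".join(f"- {doc}" for doc in ctx.retrieved_docs)
        parts.append(f"[KNOWLEDGE]\n{docs}")
    for role, text in ctx.history:
        parts.append(f"[{role.upper()}]\n{text}")
    # The user's immediate prompt goes last, after all supporting context.
    parts.append(f"[USER]\n{ctx.user_prompt}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    ctx = Context(
        system_prompt="You are a helpful support assistant.",
        user_prompt="How do I reset my password?",
        history=[("user", "Hi"), ("assistant", "Hello! How can I help?")],
        retrieved_docs=["Passwords can be reset from Settings > Security."],
    )
    prompt = build_context(ctx)
    print(type(prompt).__name__)  # the final "context" is just a str
    print(prompt)
```

Chat-style APIs typically accept a list of role-tagged messages rather than one string, but a chat template still serializes those messages into a single input sequence before the model sees them.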

But to simplify, general usage has settled on this:

- The most immediate user input to an LLM is the ‘prompt’.
- Everything given to an LLM in a single call is the ‘context’.

Therefore, designing the prompt and designing the context are two different things.

Context engineering is emerging as a much broader field: it involves not only the user entering a well-structured prompt but also giving the LLM the right information, in the right amount, to get the best output.
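“The right information in the right amount” can be illustrated with a budgeted selection step. This is a minimal sketch, assuming a toy keyword-overlap score in place of the embedding similarity a real RAG pipeline would more likely use, and counting words instead of model tokens. `select_chunks` and its helpers are hypothetical names, not part of any library.

```python
import re


def tokens(text: str) -> list[str]:
    """Crude stand-in for a real tokenizer: lowercase alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def relevance(query: str, chunk: str) -> int:
    """Toy relevance score: number of distinct query words found in the chunk."""
    return len(set(tokens(query)) & set(tokens(chunk)))


def select_chunks(query: str, chunks: list[str], budget: int) -> list[str]:
    """Greedily keep the most relevant chunks whose combined size fits the budget.

    `budget` is measured in word tokens here; a real system would count
    model tokens with the model's own tokenizer.
    """
    ranked = sorted(chunks, key=lambda c: relevance(query, c), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        if relevance(query, chunk) == 0:
            break  # ranked in descending order, so everything left is irrelevant
        size = len(tokens(chunk))
        if used + size <= budget:  # skip chunks that would overflow the budget
            selected.append(chunk)
            used += size
    return selected


if __name__ == "__main__":
    kb = [
        "Passwords can be reset from Settings > Security.",
        "Our office is closed on public holidays.",
        "If you forgot your password, use the reset link on the login page.",
    ]
    print(select_chunks("How do I reset my password?", kb, budget=20))
```

The design choice here is to leave out irrelevant material entirely and to stop adding text once the budget is reached, so the model receives a focused context instead of everything in the knowledge base.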

Why is it suddenly trending?

Did you find this article interesting? Read the complete version on my Medium.

Did you like today’s newsletter? Feel free to reply to this mail.

This newsletter is free but you can support me here.
